Architecture

Nomad is a complex system that has many different pieces. To help both users and developers of Nomad build a mental model of how it works, this page documents the system architecture.

Advanced Topic! This page covers technical details of Nomad. You do not need to understand these details to effectively use Nomad. The details are documented here for those who wish to learn about them without having to go spelunking through the source code.

Glossary

Before describing the architecture, we provide a glossary of terms to help clarify what is being discussed:

Job

A Job is a specification provided by users that declares a workload for Nomad. A Job is a form of desired state; the user is expressing that the job should be running, but not where it should be run. The responsibility of Nomad is to make sure the actual state matches the user desired state. A Job is composed of one or more task groups.

Task Group

A Task Group is a set of tasks that must be run together. For example, a web server may require that a log shipping co-process is always running as well. A task group is the unit of scheduling, meaning the entire group must run on the same client node and cannot be split.

Driver

A Driver represents the basic means of executing your Tasks. Example Drivers include Docker, QEMU, Java, and static binaries.

Task

A Task is the smallest unit of work in Nomad. Tasks are executed by drivers, which allow Nomad to be flexible in the types of tasks it supports. Tasks specify their driver, configuration for the driver, constraints, and resources required.

Client

A Nomad client is an agent configured to run and manage tasks using available compute resources on a machine. The agent is responsible for registering with the servers, watching for any work to be assigned and executing tasks. The Nomad agent is a long lived process which interfaces with the servers.

Allocation

An Allocation is a mapping between a task group in a job and a client node. A single job may have hundreds or thousands of task groups, meaning an equivalent number of allocations must exist to map the work to client machines. Allocations are created by the Nomad servers as part of scheduling decisions made during an evaluation.

Evaluation

Evaluations are the mechanism by which Nomad makes scheduling decisions. When either the desired state (jobs) or actual state (clients) changes, Nomad creates a new evaluation to determine if any actions must be taken. An evaluation may result in changes to allocations if necessary.

Deployment

Deployments are the mechanism by which Nomad rolls out changes to cluster state

in a step-by-step fashion. Deployments are only available for Jobs with the type

service. When an Evaluation is processed, the scheduler creates only the

number of Allocations permitted by the update block and the current state

of the cluster. The Deployment is used to monitor the health of those

Allocations and emit a new Evaluation for the next step of the update.

Server

Nomad servers are the brains of the cluster. There is a cluster of servers per region and they manage all jobs and clients, run evaluations, and create task allocations. The servers replicate data between each other and perform leader election to ensure high availability. More information about latency requirements for servers can be found in Network Topology.

Regions

Nomad models infrastructure as regions and datacenters. A region will contain one or more datacenters. A set of servers joined together will represent a single region. Servers federate across regions to make Nomad globally aware.

Datacenters

Nomad models a datacenter as an abstract grouping of clients within a region. Nomad clients are not required to be in the same datacenter as the servers they are joined with, but do need to be in the same region. Datacenters provide a way to express fault tolerance among jobs as well as isolation of infrastructure.

Node

A more generic term used to refer to machines running Nomad agents in client mode. Unless noted otherwise, it may be used interchangeably with client.

Node Pool

Node pools are used to group nodes and can be used to restrict which jobs are able to place allocations in a given set of nodes. Example use cases for node pools include segmenting nodes by environment (development, staging, production), by department (engineering, finance, support), or by functionality (databases, ingress proxy, applications).

Bin Packing

Bin Packing is the process of filling bins with items in a way that maximizes the utilization of bins. This extends to Nomad, where the clients are "bins" and the items are task groups. Nomad optimizes resources by efficiently bin packing tasks onto client machines.

High-Level Overview

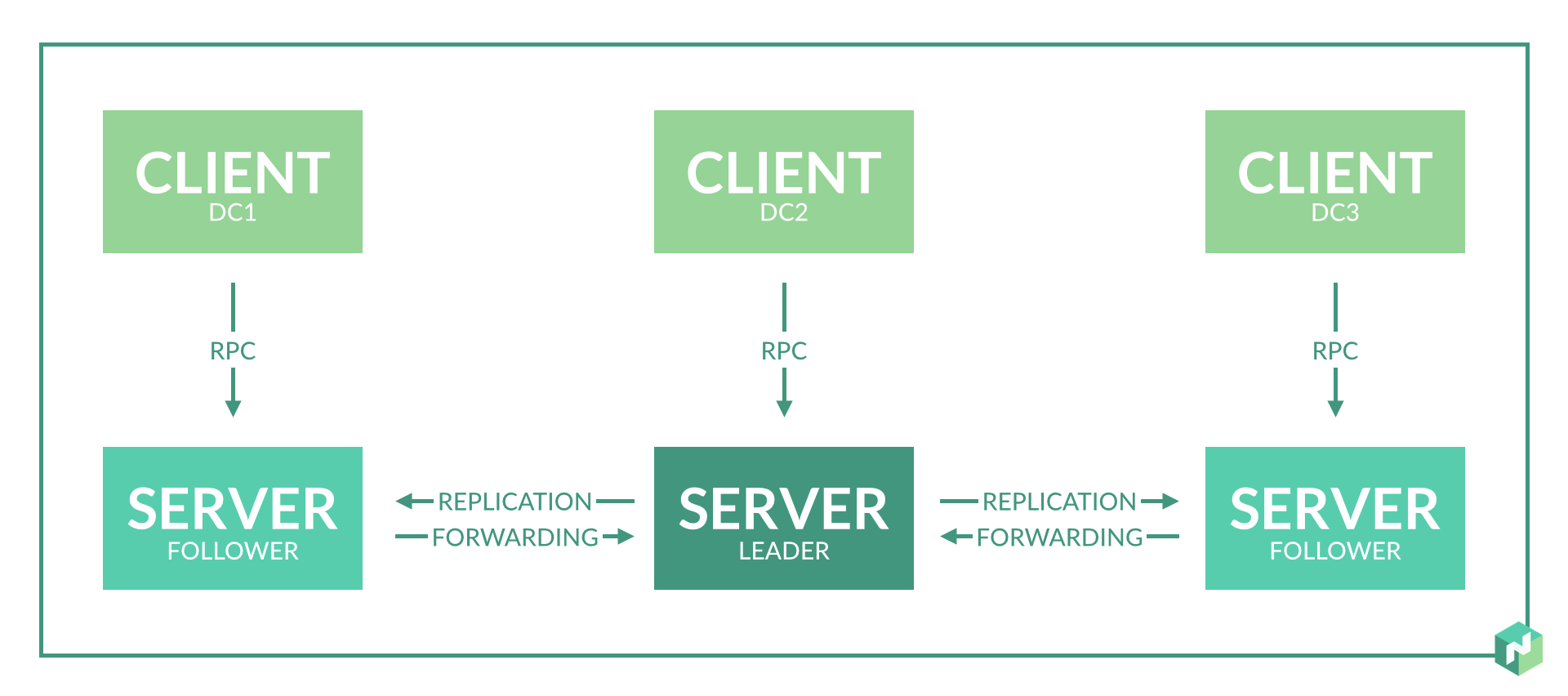

Looking at only a single region, at a high level Nomad looks like this:

Within each region, we have both clients and servers. Servers are responsible for accepting jobs from users, managing clients, and computing task placements. Each region may have clients from multiple datacenters, allowing a small number of servers to handle very large clusters.

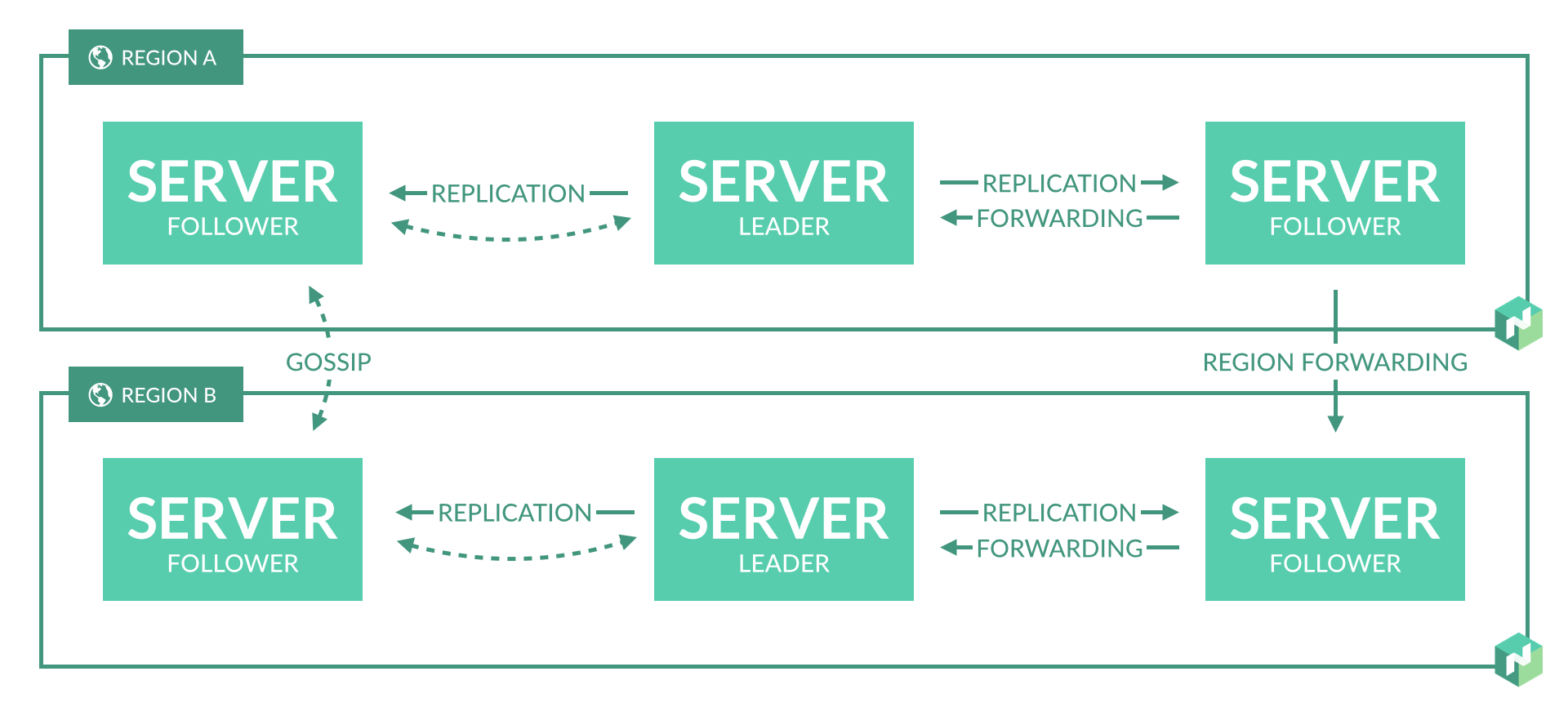

In some cases, for either availability or scalability, you may need to run multiple regions. Nomad supports federating multiple regions together into a single cluster. At a high level, this setup looks like this:

Regions are fully independent from each other, and do not share jobs, clients, or state. They are loosely-coupled using a gossip protocol, which allows users to submit jobs to any region or query the state of any region transparently. Requests are forwarded to the appropriate server to be processed and the results returned. Data is not replicated between regions.

The servers in each region are all part of a single consensus group. This means that they work together to elect a single leader which has extra duties. The leader is responsible for processing all queries and transactions. Nomad is optimistically concurrent, meaning all servers participate in making scheduling decisions in parallel. The leader provides the additional coordination necessary to do this safely and to ensure clients are not oversubscribed.

Each region is expected to have either three or five servers. This strikes a balance between availability in the case of failure and performance, as consensus gets progressively slower as more servers are added. However, there is no limit to the number of clients per region.

Clients are configured to communicate with their regional servers and communicate using remote procedure calls (RPC) to register themselves, send heartbeats for liveness, wait for new allocations, and update the status of allocations. A client registers with the servers to provide the resources available, attributes, and installed drivers. Servers use this information for scheduling decisions and create allocations to assign work to clients.

Users make use of the Nomad CLI or API to submit jobs to the servers. A job represents a desired state and provides the set of tasks that should be run. The servers are responsible for scheduling the tasks, which is done by finding an optimal placement for each task such that resource utilization is maximized while satisfying all constraints specified by the job. Resource utilization is maximized by bin packing, in which the scheduling tries to make use of all the resources of a machine without exhausting any dimension. Job constraints can be used to ensure an application is running in an appropriate environment. Constraints can be technical requirements based on hardware features such as architecture and availability of GPUs, or software features like operating system and kernel version, or they can be business constraints like ensuring PCI compliant workloads run on appropriate servers.

Client Organization

When the Nomad scheduler receives a job registration request, it needs to determine which clients will run allocations for the job. By default, all nodes are considered for placements but this process can be adjusted by cluster operators and job submitters to achieve more control over where allocations are placed.

The steps to achieve this control consists of setting certain values in clients' configuration and matching them in jobs, and so it's helpful to consider how clients will be configured based on your expected job registration patterns when planning your cluster deployment.

There are several options that can be used depending on the desired outcome.

Affinities and Constraints

Affinities and constraints allow users to define soft or hard requirements for

their jobs. The affinity block specifies a soft requirement

on certain node properties, meaning allocations for the job have a preference

for some nodes, but may be placed elsewhere if the rules can't be matched,

while the constraint block creates hard requirements and

allocations can only be placed in nodes that match these rules. Job placement

fails if a constraint cannot be satisfied.

These rules can reference intrinsic node characteristics, which are called node attributes and are automatically detected by Nomad, static values defined in the agent configuration file by cluster administrators, or dynamic values defined after the agent starts.

Using affinities and constraints has the downside of only allowing allocations to gravitate towards certain nodes, but it does not prevent placements of jobs where the rules don't apply.

Use affinities and constraints when certain jobs have certain node preferences but is acceptable to have other jobs sharing the same nodes.

The sections below describe the node values that can be configured and used in job affinity and constraint rules.

Node Class

Node class is an arbitrary value that can be used to group nodes based on some

characteristics, like the instance size or the presence of fast hard drives,

and is specified in the client configuration file using the

node_class parameter.

Dynamic and Static Node Metadata

Node metadata are arbitrary key-value mappings specified either in the client

configuration file using the meta parameter or

dynamically via the nomad node meta command and the

/v1/client/metadata API endpoint.

There are no preconceived use cases for metadata values, and each team may

choose to use them in different ways. Some examples of static metadata include

resource ownership, such as owner = "team-qa", or fine-grained locality,

rack = "3". Dynamic metadata may be used to track runtime information, such

as jobs running in a given client.

Datacenter

Datacenters represent a geographical location in a region that can be used for fault tolerance and infrastructure isolation.

It is defined in the agent configuration file using the

datacenter parameter and, unlike affinities and

constraints, datacenters are opt-in at the job level, meaning that a job only

places allocations in the datacenters it uses, and, more importantly, only jobs

in a given datacenter are allowed to place allocations in those nodes.

Given the strong connotation of a geographical location, use datacenters to

represent where a node resides rather than its intended use. The

spread block can help achieve fault tolerance across datacenters.

Node Pool

Node pools allow grouping nodes that can be targeted by jobs to achieve workload isolation.

Similarly to datacenters, node pools are configured in an agent configuration

file using the node_pool attribute, and are opt-in

on jobs, allowing restricted use of certain nodes by specific jobs without

extra configuration.

But unlike datacenters, node pools don't have a preconceived notion and can be used for several use cases, such as segmenting infrastructure per environment (development, staging, production), by department (engineering, finance, support), or by functionality (databases, ingress proxy, applications).

Node pools are also a first-class concept and can hold additional metadata and configuration.

Use node pools when there is a need to restrict and reserve certain nodes for specific workloads, or when you need to adjust specific scheduler configuration values.

Refer to the Node Pools concept page for more information.

Getting in Depth

This has been a brief high-level overview of the architecture of Nomad. There are more details available for each of the sub-systems. The consensus protocol, gossip protocol, and scheduler design are all documented in more detail.

For other details, either consult the code, open an issue on GitHub, or ask a question in the community forum.